A fully connected ReLU network with one hidden layer and no biases, trained to predict y from x by minimizing the squared Euclidean distance.
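Written out, the model and its gradients look as follows. This is a hand derivation, not part of the original post: $x$ is $N \times D_\text{in}$, $W_1$ (`w1`) is $D_\text{in} \times H$, $W_2$ (`w2`) is $H \times D_\text{out}$, and $h$, $h_{+}$ are our names for the hidden pre-activation and its ReLU. The gradients are the quantities autograd computes, and the last line is the gradient-descent step with the script's learning rate $\eta$.

$$
h = x W_1, \qquad h_{+} = \max(h, 0), \qquad \hat{y} = h_{+} W_2, \qquad
L = \sum_{i=1}^{N} \sum_{j=1}^{D_\text{out}} \left(\hat{y}_{ij} - y_{ij}\right)^2
$$

$$
\frac{\partial L}{\partial \hat{y}} = 2(\hat{y} - y), \qquad
\frac{\partial L}{\partial W_2} = h_{+}^{\top} \frac{\partial L}{\partial \hat{y}}, \qquad
\frac{\partial L}{\partial h} = \left(\frac{\partial L}{\partial \hat{y}} W_2^{\top}\right) \odot \mathbf{1}[h > 0], \qquad
\frac{\partial L}{\partial W_1} = x^{\top} \frac{\partial L}{\partial h}
$$

$$
W_k \leftarrow W_k - \eta \, \frac{\partial L}{\partial W_k}, \qquad k \in \{1, 2\}, \qquad \eta = 10^{-6}
$$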

This implementation computes the forward pass using operations on PyTorch Variables and uses PyTorch autograd to compute the gradients.
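To see concretely what autograd saves us from writing, the sketch below builds the same forward expression on small, made-up sizes (N=4, D_in=5, H=3, D_out=2, chosen only for illustration), calls `loss.backward()`, and compares `w1.grad` and `w2.grad` with gradients computed by hand with the chain rule. It assumes PyTorch 0.4 or later, so it uses plain tensors with `requires_grad=True` instead of `Variable`, and float64 so rounding does not affect the comparison.

```python
import torch

torch.manual_seed(0)

# Small, hypothetical sizes so the check runs instantly.
N, D_in, H, D_out = 4, 5, 3, 2
x = torch.randn(N, D_in, dtype=torch.float64)
y = torch.randn(N, D_out, dtype=torch.float64)
w1 = torch.randn(D_in, H, dtype=torch.float64, requires_grad=True)
w2 = torch.randn(H, D_out, dtype=torch.float64, requires_grad=True)

# Forward pass: same expression as in the training script, split into steps.
h = x.mm(w1)
h_relu = h.clamp(min=0)
y_pred = h_relu.mm(w2)
loss = (y_pred - y).pow(2).sum()

# Backward pass done by autograd: fills w1.grad and w2.grad.
loss.backward()

# Backward pass done by hand (chain rule), outside the autograd graph.
with torch.no_grad():
    grad_y_pred = 2.0 * (y_pred - y)
    grad_w2 = h_relu.t().mm(grad_y_pred)
    grad_h_relu = grad_y_pred.mm(w2.t())
    grad_h = grad_h_relu.clone()
    grad_h[h <= 0] = 0  # ReLU passes gradient only where h > 0
    grad_w1 = x.t().mm(grad_h)

print(torch.allclose(w1.grad, grad_w1))  # True
print(torch.allclose(w2.grad, grad_w2))  # True
```

The hand-written version needs the intermediate values `h` and `h_relu`; with autograd the forward pass can be a single chained expression, because the graph keeps what the backward pass needs.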

A PyTorch Variable is a wrapper around a PyTorch Tensor that represents a node in a computational graph. If x is a Variable, then x.data is a Tensor holding its value, and x.grad is another Variable holding the gradient of x with respect to some scalar value.
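A minimal illustration of `x.data` and `x.grad`, assuming a recent PyTorch in which `Variable` is still importable (there, `x.grad` comes back as a plain Tensor). The scalar `s` is a hypothetical example built from `x`; `s.backward()` fills `x.grad` with ds/dx = 2x. The last lines show that `.grad` accumulates across backward passes instead of being overwritten, which is why the training loop below zeros `w1.grad` and `w2.grad` after every update.

```python
import torch
from torch.autograd import Variable

# Leaf node of the graph; autograd tracks operations on it.
x = Variable(torch.tensor([1.0, 2.0, 3.0]), requires_grad=True)

# Hypothetical scalar built from x: s = sum(x_i^2).
s = (x * x).sum()

print(x.data)     # tensor([1., 2., 3.])  (the wrapped values)
print(x.grad)     # None  (no backward pass has run yet)
print(s.grad_fn)  # the graph node that produced s

s.backward()
print(x.grad)     # ds/dx = 2x, i.e. values 2, 4, 6

# A second backward pass ADDS to x.grad instead of replacing it.
(x * x).sum().backward()
print(x.grad)     # values 4, 8, 12
```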

PyTorch Variables have the same API as PyTorch Tensors: (almost) any operation you can perform on a Tensor you can also perform on a Variable; the difference is that autograd computes the gradients automatically.
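One caveat for current PyTorch versions: since release 0.4, Variable and Tensor have been merged. `Variable(tensor, requires_grad=...)` still works but returns an ordinary `torch.Tensor`, and the recommended style is to pass `requires_grad=True` to the tensor directly. The sketch below, with arbitrary shapes chosen for illustration, checks this and runs a chain of Tensor operations that autograd tracks exactly as it would on a Variable.

```python
import torch
from torch.autograd import Variable

t = torch.randn(2, 3)
v = Variable(t, requires_grad=True)  # legacy wrapper

# In PyTorch >= 0.4 the "Variable" is just a Tensor.
print(type(v))          # <class 'torch.Tensor'>
print(v.requires_grad)  # True

# Ordinary tensor operations work on v, and autograd records them.
out = v.mm(torch.ones(3, 1)).clamp(min=0).sum()
out.backward()
print(v.grad.shape)     # torch.Size([2, 3])

# Modern equivalent without the wrapper.
w = torch.randn(2, 3, requires_grad=True)
print(w.requires_grad)  # True
```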

**************

Code 1

**************

(.env) [boris@fedora34server PYTORCH]$ cat pyTorch1.py

import torch

from torch.autograd import Variable

dtype = torch.FloatTensor

# dtype = torch.cuda.FloatTensor # Uncomment this to run on GPU

# N is batch size; D_in is input dimension;

# H is hidden dimension; D_out is output dimension.

N, D_in, H, D_out = 64, 1000, 100, 10

# Create random Tensors to hold input and outputs, and wrap them in Variables.

# Setting requires_grad=False indicates that we do not need to compute gradients

# with respect to these Variables during the backward pass.

x = Variable(torch.randn(N, D_in).type(dtype), requires_grad=False)

y = Variable(torch.randn(N, D_out).type(dtype), requires_grad=False)

# Create random Tensors for weights, and wrap them in Variables.

# Setting requires_grad=True indicates that we want to compute gradients with

# respect to these Variables during the backward pass.

w1 = Variable(torch.randn(D_in, H).type(dtype), requires_grad=True)

w2 = Variable(torch.randn(H, D_out).type(dtype), requires_grad=True)

learning_rate = 1e-6

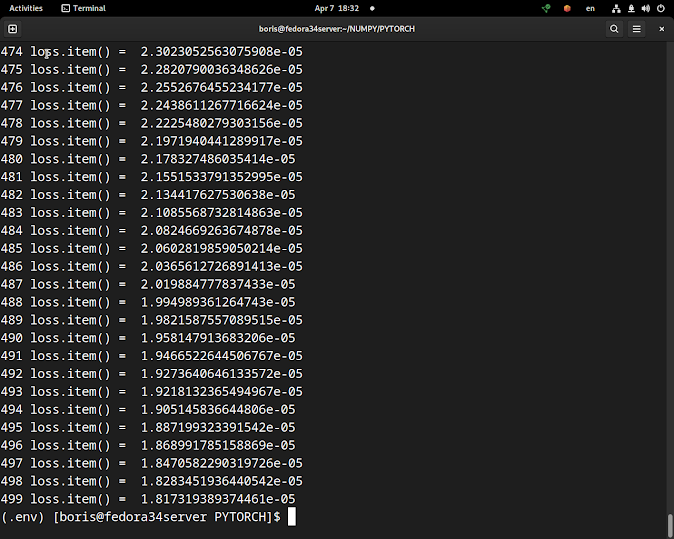

for t in range(500):

# Forward pass: compute predicted y using operations on Variables; these

# are exactly the same operations we used to compute the forward pass using

# Tensors, but we do not need to keep references to intermediate values since

# we are not implementing the backward pass by hand.

y_pred = x.mm(w1).clamp(min=0).mm(w2)

# Compute and print loss using operations on Variables.

loss = (y_pred - y).pow(2).sum()

# Use autograd to compute the backward pass. This call will compute

# the gradient of loss with respect to all Variables with requires_grad=True.

# After this call w1.grad and w2.grad will be Variables holding the gradient

# of the loss with respect to w1 and w2 respectively.

loss.backward()

# Update weights using gradient descent; w1.data and w2.data are Tensors,

# w1.grad and w2.grad are Variables and w1.grad.data and w2.grad.data are

# Tensors.

w1.data -= learning_rate * w1.grad.data

w2.data -= learning_rate * w2.grad.data

print("w1.data = ",w1.data)

print("w1.grad.data = ",w1.grad.data)

# Manually zero the gradients after updating weights

w1.grad.data.zero_()

w2.grad.data.zero_()
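For comparison, here is a sketch of the same training loop in the post-0.4 style, without `Variable` and `.data`: the weights are created with `requires_grad=True`, and the update runs inside `torch.no_grad()` so autograd does not record it. The device selection, the fixed seed and the `losses` list are additions for this sketch, not part of the original script; the sizes, learning rate and iteration count are unchanged.

```python
import torch

torch.manual_seed(0)  # added for reproducibility

# Use the GPU when available (replaces the dtype = torch.cuda.FloatTensor switch).
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
dtype = torch.float

N, D_in, H, D_out = 64, 1000, 100, 10

# Inputs and targets: plain tensors, no gradients needed.
x = torch.randn(N, D_in, device=device, dtype=dtype)
y = torch.randn(N, D_out, device=device, dtype=dtype)

# Weights: leaf tensors tracked by autograd.
w1 = torch.randn(D_in, H, device=device, dtype=dtype, requires_grad=True)
w2 = torch.randn(H, D_out, device=device, dtype=dtype, requires_grad=True)

learning_rate = 1e-6
losses = []
for t in range(500):
    # Forward pass and squared-error loss, as in the original.
    y_pred = x.mm(w1).clamp(min=0).mm(w2)
    loss = (y_pred - y).pow(2).sum()
    losses.append(loss.item())

    # Backward pass: fills w1.grad and w2.grad.
    loss.backward()

    # Gradient-descent step outside the graph instead of writing through .data.
    with torch.no_grad():
        w1 -= learning_rate * w1.grad
        w2 -= learning_rate * w2.grad
        # Gradients accumulate, so reset them before the next iteration.
        w1.grad.zero_()
        w2.grad.zero_()

print(losses[0], losses[-1])
```

Current PyTorch tutorials write the update step this way; in-place writes through `.data` are not tracked by autograd, so errors made there can go unnoticed.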
